How Fil Menczer and the Observatory on Social Media are advancing research to address the challenges of misinformation on social media.

As we approach another election cycle, the spread of misinformation on social media is a prominent concern. To counter it, experts are using tools such as algorithms and bots to monitor and analyze how misleading content shapes public perception.

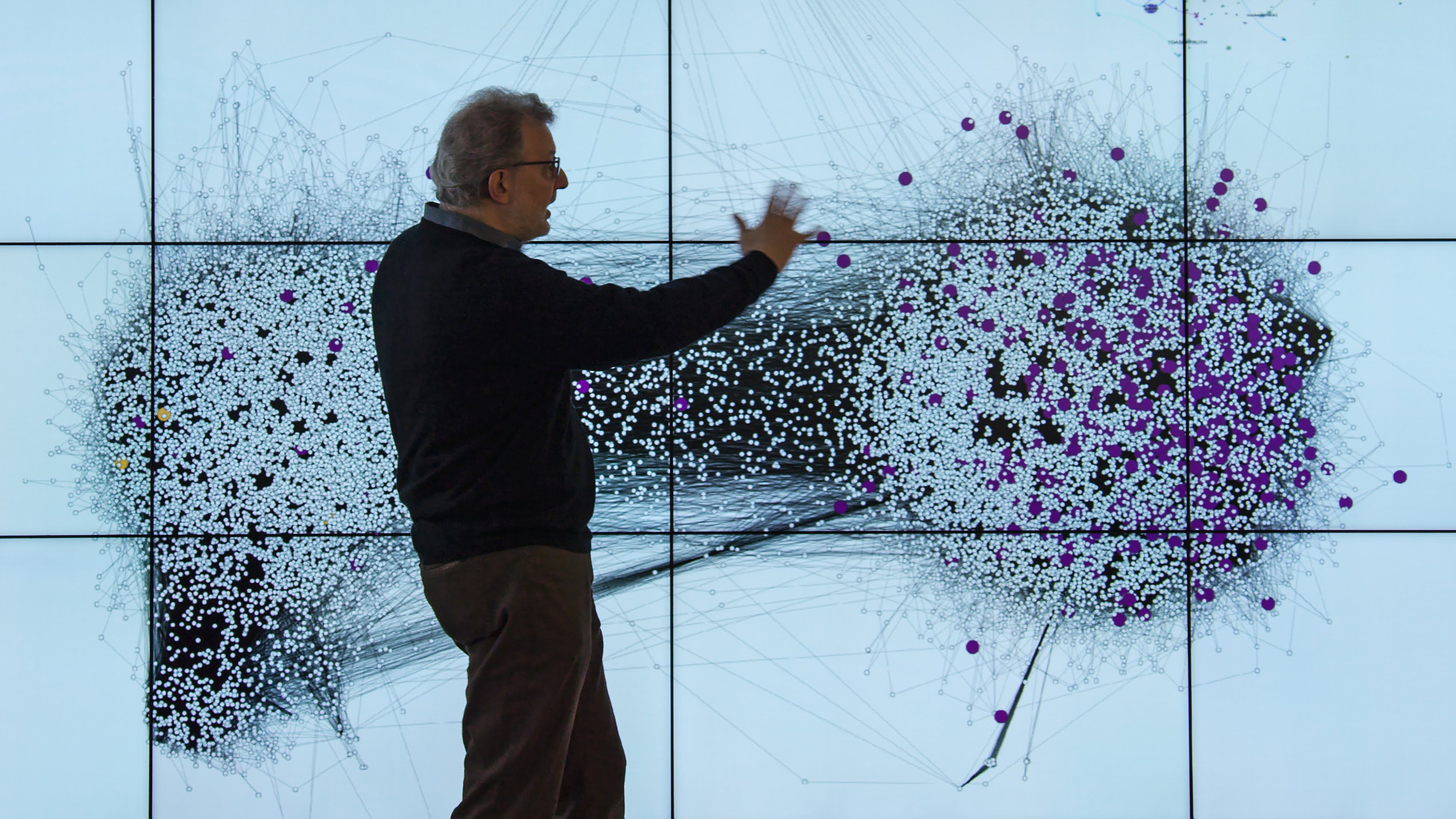

One leading figure is Fil Menczer, the director of the Observatory on Social Media (OSoMe, pronounced “awesome”), a multidisciplinary research center at Indiana University. Menczer, a Luddy Distinguished Professor of Informatics and Computer Science, guides a team dedicated to helping journalists, researchers, and citizens identify online manipulation and make informed decisions.

“Social media distorts our view of the world in ways that are hard for us to understand,” Menczer says. “And in today’s polarized political and social landscape, that distortion is especially dangerous, leaving citizens vulnerable to manipulation and long-standing institutions at the foundation of democracy on increasingly shaky ground.”

Our research helps understand what countermeasures can preserve the integrity of the information ecosystem.

Fil Menczer

Understanding and addressing disinformation

Standing in the middle of this toxic social media stew, Menczer is focused on uncovering and combating the false conspiracy theories and disinformation campaigns that spread like wildfire across platforms like X (formerly Twitter). One recent example highlights the challenge: in the wake of the back-to-back hurricanes that blasted Florida this fall, a false narrative began circulating across social media that disaster relief funds were being diverted to the war in Ukraine. It spread fast — fueled by social media algorithms designed to prioritize sensational stories over truth.

“We're more vulnerable than we think,” Menczer warns. And that's the heart of the issue. Whether liberal or conservative, college-educated or not, urban or rural — no one is immune to the influence of social media's distortions, he says.

Since its creation in 2019, the Observatory has been tracking how misinformation influences communities and public discourse, aiming to provide resources that help people better navigate online content.

Disinformation superspreaders

The strategies that bad actors deploy have evolved over time. Before OSoMe was officially a university center, Menczer and his team were already studying them. They were among the first to discover “social bots” (automated accounts programmed to spread false information) during the 2010 midterm elections. Since then, the threat has only intensified, and now artificial intelligence (AI) is being deployed to supercharge these efforts.

Menczer and his team have identified massive amounts of disinformation spreading virally. The situation has worsened this election cycle on platforms such as X, which has relaxed content moderation and allowed disinformation “superspreaders,” as Menczer calls them, to return in full force.

“These are powerful influencers with political or financial incentives to spread corrosive, polarizing, hateful, and harmful narratives,” he says. “People are fed simple but false explanations — blame minorities, immigrants, scientists, or the media.”

OSoMe’s latest findings support that view and highlight the scale of the problem. The team has uncovered networks of thousands of fake profiles sporting AI-generated faces, designed to push coordinated, false messages. Menczer estimates that more than 10,000 of these accounts are active daily, manipulating conversations and spreading propaganda.

And it doesn’t stop there.

AI-generated content from tools such as ChatGPT has been used to steer people to fake news websites. So far, traditional detection tools have proven virtually powerless to distinguish human-written content from bot-generated content.

Let's Expose the Truth

Description of the video:

My name is Filippo Menczer, and I'm the director of OSoMe, which is our Observatory on Social Media here at Indiana University. The type of misinformation that we're interested in is the misinformation that spreads virally. It can spread organically because people believe it, or it can also be pushed by coordinated campaigns or by people who are specifically trying to manipulate opinions.

The information that we access affects our opinions, and then those opinions affect how we vote. And those votes affect the policies that we enact. So a well-working democracy relies on informed voters. And so if we can manipulate social media, then we can manipulate people's opinion and we can manipulate democracy.

An example of a misinformation campaign was when, during the midterm elections in 2018, there were tweets going around saying that men should not vote as a way to respect women. And these were spreading virally. And some of the tools that we developed in our lab were used to uncover them and flag them to the social media companies, who then took them down. There were at least 10,000 bot accounts that Twitter took down that were spreading this misinformation.

It is really important to understand how people consume information and how they consume misinformation. And that's why we realized that we needed to partner with The Media School, because we want our tools not just to be used in a lab but to be used by the general population, and to be used by journalists so that they can understand what's true and what's not. It is really important to do what we can to try to understand how the manipulation happens and counter it, so that people can actually have access to real information from real journalists about the real world and their opinions can be based on facts.

We've been working on research to understand how social media are manipulated and how misinformation spreads for many years. As we develop better countermeasures, people will develop better attacks. And this is normal in this kind of problem. So what's important for all of us to know is that we, all of us, are vulnerable. It doesn't matter how smart we are, how skeptical we are, or how much we think that we can do our own research on Google. We can be manipulated.

Promoting information integrity

Despite these challenges, Menczer is optimistic. He believes that misinformation can be addressed through tools and education, encouraging people to approach social media with caution.

“The advice I give people is to use social media to keep in touch with friends and family,” he says. “Social media is a bad way to access news and political information because we get a view that is biased and distorted by echo chambers. We tend to get anxious and angry and lose objectivity.”

That’s where OSoMe’s cutting-edge tools come into play. For example, Botometer X analyzes historical data to detect bots on X. Facebook News Bridge, an AI-powered browser extension, flags potentially false posts and offers responses aimed at bridging political divides. These tools, free and accessible on the OSoMe website, are meant to equip individuals with countermeasures to recognize misinformation and make informed decisions.

At the heart of OSoMe’s mission is a team of Indiana University students. From analyzing data to developing new tools, their work is critical to the center’s success, helping to build a more informed and resilient public.

“We have tools that highlight disinformation superspreaders, visualize information operations, and train young people to recognize low-quality sources,” Menczer says. “I am proud of all these tools and of the students and staff in our center who design and develop them.”

Looking forward

Misinformation’s impact reaches far beyond elections, affecting trust, health, and public safety. “Our research helps understand what countermeasures can preserve the integrity of the information ecosystem,” Menczer says. “This is not only necessary to preserve our democracy but also to mitigate the harms of disinformation in critical domains like public health, where it can and does kill people.”

Despite the enormity of the challenge, Menczer believes that, with the right tools and media literacy skills, people can fight back. OSoMe’s work is creating hope in a landscape that often feels dominated by bad actors and falsehoods.


“Political propaganda and misinformation have always existed,” Menczer says. “But social media has made us more vulnerable. It is not good for our health or our democracy.”